Feature Alignment: Rethinking Efficient Active Learning via Proxy in the Context of Pre-trained Models

Published in TMLR, 2024

Key observations:

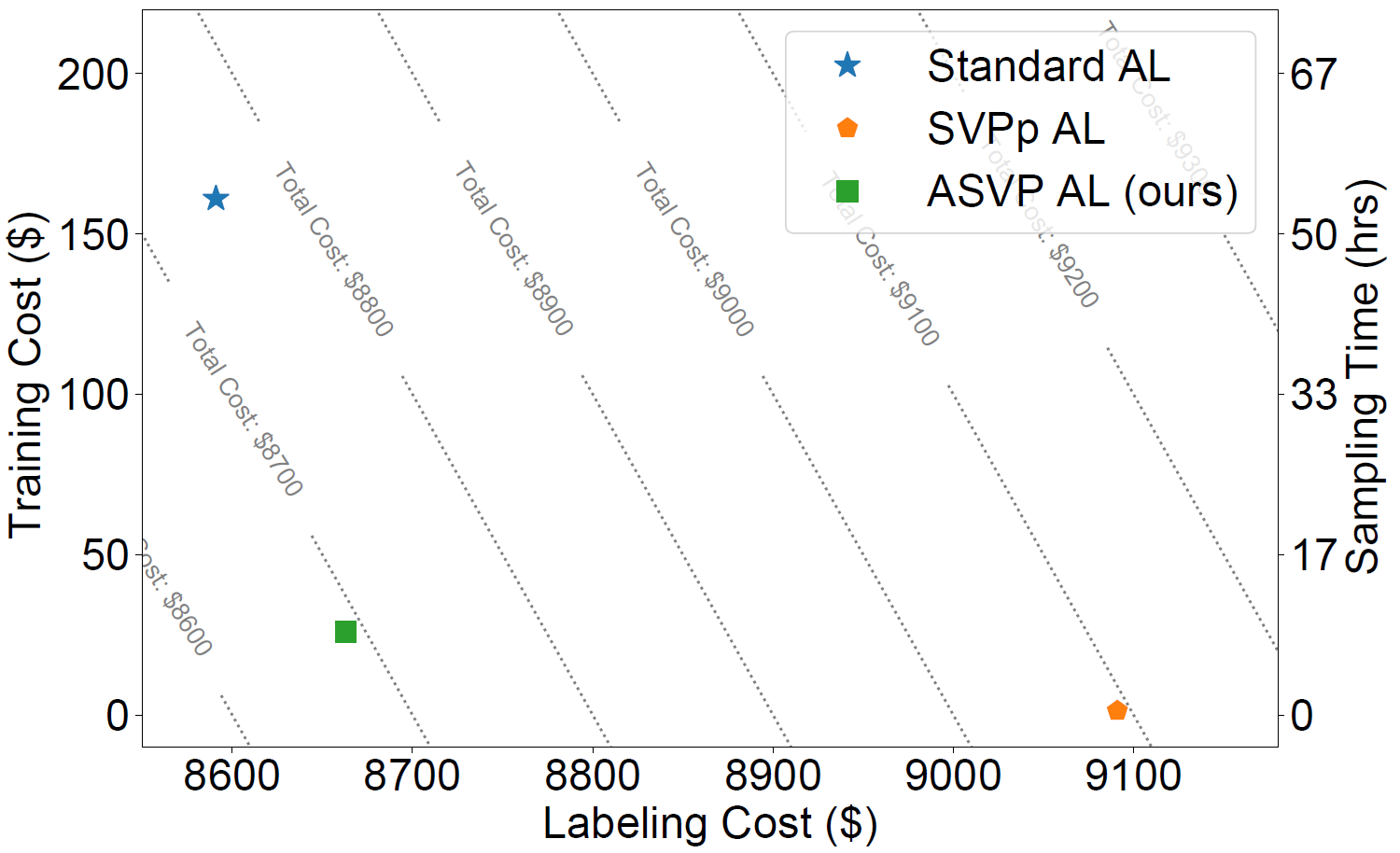

- Active learning based on a proxy model (an MLP classifier that takes pre-computed features from a pre-trained model as input) improves computational efficiency, but it may reduce active learning (AL) accuracy and lead to higher overall costs.

- Not all differences in sample selection between the proxy model and the fine-tuned model translate into differences in AL performance.

- When the number of labeled samples is small, training the final model with LP-FT (linear probing followed by fine-tuning) can help mitigate the AL performance gap. When more labels are available, fine-tuning is necessary to update the pre-computed features.
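To make the notion of "differences in sample selection" concrete, here is a minimal Python sketch of how one might compare the samples picked by a proxy model with those picked by a fine-tuned model. It is an illustration only, not the paper's method: margin sampling is an assumed acquisition function, the probability tables are made-up toy values, and the names `margin_scores`, `select_for_labeling`, and `selection_overlap` are hypothetical.

```python
from typing import List, Sequence


def margin_scores(probs: Sequence[Sequence[float]]) -> List[float]:
    """Gap between the two largest class probabilities (smaller = more uncertain)."""
    scores = []
    for p in probs:
        top = sorted(p, reverse=True)
        scores.append(top[0] - top[1])
    return scores


def select_for_labeling(
    probs: Sequence[Sequence[float]], sample_ids: Sequence[int], budget: int
) -> List[int]:
    """Return the `budget` sample ids with the smallest margin (assumed strategy)."""
    ranked = sorted(zip(margin_scores(probs), sample_ids))
    return [sample_id for _, sample_id in ranked[:budget]]


def selection_overlap(a: Sequence[int], b: Sequence[int]) -> float:
    """Jaccard overlap between two selected sets (1.0 means identical picks)."""
    set_a, set_b = set(a), set(b)
    return len(set_a & set_b) / len(set_a | set_b)


# Toy class probabilities for four unlabeled samples (illustrative values only).
sample_ids = [10, 11, 12, 13]
proxy_probs = [  # e.g. from an MLP on pre-computed features
    [0.90, 0.05, 0.05],
    [0.40, 0.35, 0.25],
    [0.50, 0.49, 0.01],
    [0.60, 0.30, 0.10],
]
finetuned_probs = [  # e.g. from the fine-tuned model
    [0.80, 0.10, 0.10],
    [0.45, 0.40, 0.15],
    [0.70, 0.20, 0.10],
    [0.34, 0.33, 0.33],
]

proxy_picks = select_for_labeling(proxy_probs, sample_ids, budget=2)
finetuned_picks = select_for_labeling(finetuned_probs, sample_ids, budget=2)
overlap = selection_overlap(proxy_picks, finetuned_picks)
print(proxy_picks, finetuned_picks, overlap)
```

A low overlap on its own does not imply a performance gap. As the second observation states, only some selection differences affect AL performance, so a measure like this is a starting point for analysis, not a verdict.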

Recommended citation: Ziting Wen, Oscar Pizarro, and Stefan B. Williams. "Feature Alignment: Rethinking Efficient Active Learning via Proxy in the Context of Pre-trained Models." Transactions on Machine Learning Research (2024).
