Active self-semi-supervised learning for few labeled samples

Published in Neurocomputing, 2024

Key observations:

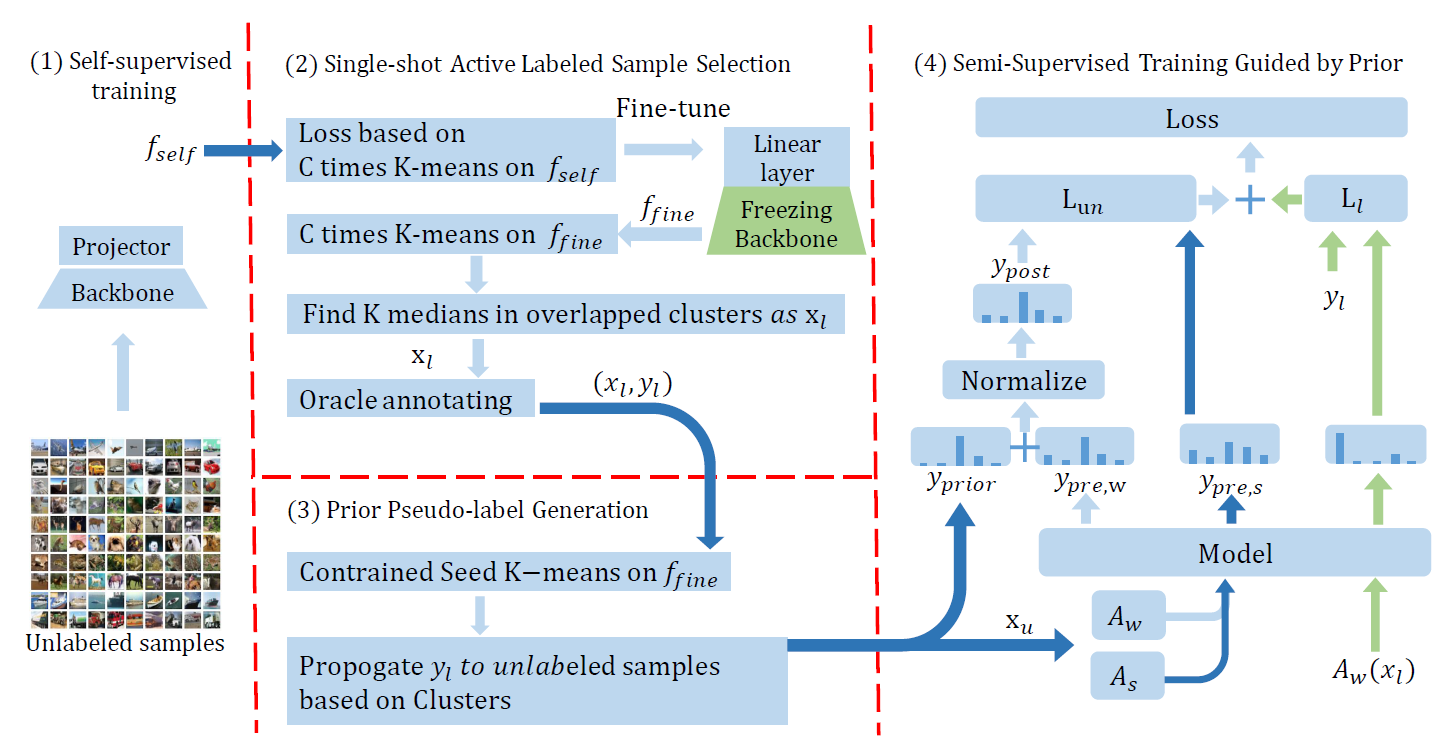

- Weight initialization struggles to effectively transfer the valuable information learned during self-supervised training to the semi-supervised model.

- Label propagation on pre-trained features constructs prior pseudo-labels, which serve as an effective intermediary for transferring information from self-supervised learning to semi-supervised models.

- Active learning is adapted to improve the accuracy of the prior pseudo-labels.
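The observations above describe a pipeline of pre-trained features, then label propagation to prior pseudo-labels, then active learning to pick which samples to label. The page does not specify the paper's graph construction, hyperparameters or query criterion, so the following is only a minimal sketch under stated assumptions. It uses the classic normalized-graph label-propagation iteration $F \leftarrow \alpha S F + (1-\alpha) Y$ on a kNN Gaussian graph. The entropy-based query rule is a generic stand-in, not the paper's active-learning method. All function names and parameters (`knn_affinity`, `label_propagation`, `select_queries`, `k`, `alpha`, `sigma`, `iters`) are hypothetical.

```python
import numpy as np


def knn_affinity(features, k=3, sigma=1.0):
    """Symmetric k-nearest-neighbour Gaussian affinity on L2-normalised features."""
    x = features / np.linalg.norm(features, axis=1, keepdims=True)  # assumes no zero rows
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(-1)              # pairwise squared distances
    w = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(w, 0.0)                                         # no self-loops
    drop = np.argsort(-w, axis=1)[:, k:]                             # all but the k strongest edges
    np.put_along_axis(w, drop, 0.0, axis=1)
    return np.maximum(w, w.T)                                        # symmetrise the kNN graph


def label_propagation(features, labels, num_classes, k=3, alpha=0.99, iters=50):
    """Spread the few known labels (labels == -1 means unlabelled) over the graph.

    Returns soft prior pseudo-labels of shape (n, num_classes); each row sums to 1.
    """
    w = knn_affinity(features, k)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(w.sum(1), 1e-12))
    s = d_inv_sqrt[:, None] * w * d_inv_sqrt[None, :]               # S = D^-1/2 W D^-1/2
    y = np.zeros((len(labels), num_classes))
    known = np.flatnonzero(labels >= 0)
    y[known, labels[known]] = 1.0                                    # one-hot seeds
    f = y.copy()
    for _ in range(iters):
        f = alpha * (s @ f) + (1.0 - alpha) * y                      # F <- a S F + (1 - a) Y
    totals = f.sum(1, keepdims=True)
    # Nodes the labels never reached fall back to a uniform distribution.
    return np.where(totals > 0, f / np.maximum(totals, 1e-12), 1.0 / num_classes)


def select_queries(pseudo, labels, budget):
    """Hypothetical active-learning step: query the unlabelled samples whose
    prior pseudo-labels have the highest entropy (least confident)."""
    entropy = -(pseudo * np.log(pseudo + 1e-12)).sum(1)
    entropy[labels >= 0] = -np.inf                                   # never re-query known labels
    return np.argsort(-entropy)[:budget]


# Toy stand-in for pre-trained features: two separated clusters, one labelled point each.
feats = np.array([[1.0, 0.0], [1.0, 0.1], [1.0, -0.1], [0.9, 0.05],
                  [0.0, 1.0], [0.1, 1.0], [-0.1, 1.0], [0.05, 0.9]])
labels = np.array([0, -1, -1, -1, 1, -1, -1, -1])
pseudo = label_propagation(feats, labels, num_classes=2)
print(pseudo.argmax(1))                    # prior pseudo-label for every sample
print(select_queries(pseudo, labels, 2))   # indices to send to an annotator
```

On this toy input, every unlabelled point inherits the label of the seed in its own cluster. In a real pipeline, the queried samples would be labelled, added to `labels`, and propagation rerun before the pseudo-labels are handed to the semi-supervised model.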

Recommended citation: Ziting Wen, Oscar Pizarro, and Stefan Williams. "Active self-semi-supervised learning for few labeled samples." Neurocomputing (2024): 128772.
